AI is transforming the gaming industry by making virtual worlds more immersive, engaging, and dynamic. Game developers use artificial intelligence to generate environments and characters that are more lifelike than ever, letting players step into virtual worlds that feel almost as real as the physical one. One of the most significant ways AI is changing video games is by enhancing their realism: AI algorithms can analyze and interpret data from various sources, such as player input, environmental factors, and real-world physics, to simulate realistic behaviors and interactions within the game world.

AI and Motion Animation

Have you ever wondered how your characters move, run, jump, or play the ball in a game?

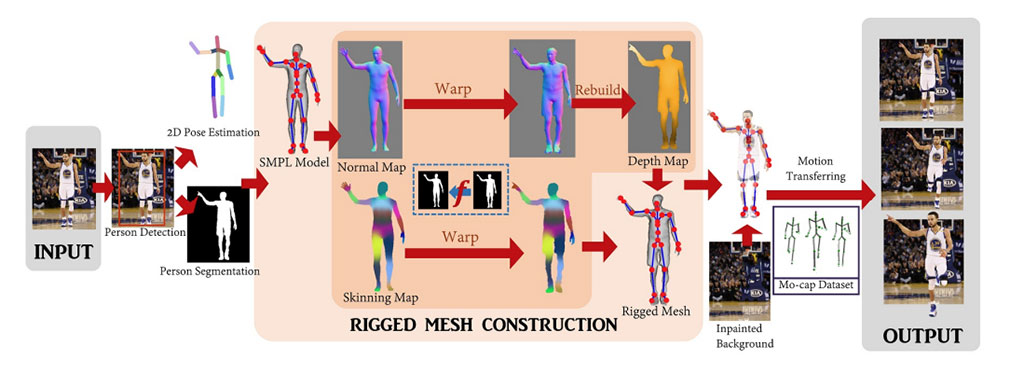

You may have noticed that animations in sports games (say, FIFA or NBA) often look realistic yet repetitive. That is because they rely on a limited set of fixed motions stored in a database. These motions are played back in phases, and the interactions between them, such as two players colliding or dribbling a ball, are calculated using physics-driven kinematics. The motions themselves come from motion capture: a person performs the movements, and the recorded data is used to create a digital version of them.

Photo: NVIDIA Developer
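A minimal sketch of this database-driven idea, written as nearest-neighbour clip selection (a style often called motion matching). The clip names and the three-number feature vector are hypothetical; real systems use much richer features, such as joint positions, velocities, and the future trajectory, and blend between clips.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    name: str
    # Hypothetical feature vector: (speed in m/s, turn rate, has_ball 0/1)
    features: tuple


def nearest_clip(database, query):
    """Return the recorded clip whose features are closest (Euclidean) to the query."""
    return min(database, key=lambda clip: math.dist(clip.features, query))


# A tiny, made-up clip database; real games store many captured motions.
DATABASE = [
    Clip("idle", (0.0, 0.0, 0.0)),
    Clip("jog", (3.0, 0.0, 0.0)),
    Clip("sprint", (7.0, 0.0, 0.0)),
    Clip("jog_turn_left", (3.0, 1.0, 0.0)),
    Clip("dribble", (3.0, 0.0, 1.0)),
]

# Each frame, the character's current state becomes a query and the closest clip plays.
print(nearest_clip(DATABASE, (2.8, 0.1, 0.0)).name)  # jog
```

Because the lookup can only ever return clips that were recorded, similar game states keep replaying the same motion, which is the repetitiveness described above.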

However, only a limited number of movements can be recorded, and the way they are combined can sometimes lead to unrealistic results and glitches (see the image below).

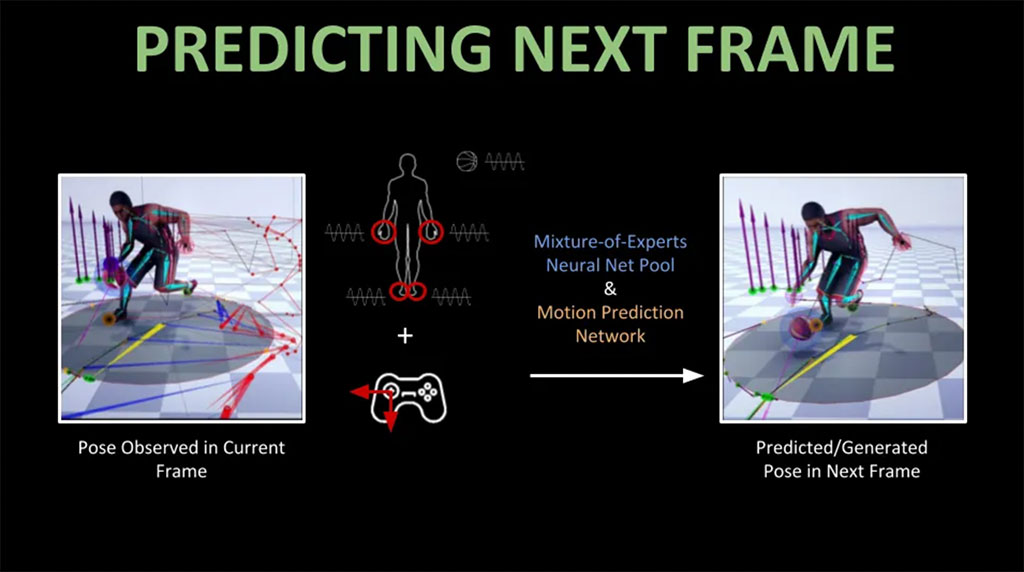

Motion animation with deep learning can address this problem. One AI framework takes the same motion-capture database used in the approach described above and uses local motion phases to predict and generate movements. This generative model helps avoid visually repetitive animations, making movements look more natural and dynamic. Because it models the motion of individual body parts rather than the whole body, the framework also makes interactions between players more realistic without hand-coding the physics behind them.

Photo: The Paper “Local Motion Phases for Learning Multi-Contact Character Movements”
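To make "local" phases concrete, here is a simplified sketch: each body part gets its own phase clock derived from a per-frame binary contact signal (1 = touching the ground or ball). The phase rises linearly from 0 to 1 between consecutive contact onsets. This is a stand-in for illustration only; the paper's actual procedure for turning contacts into phases is more involved, and the contact sequences below are made up.

```python
def local_phase(contacts):
    """
    Simplified local phase for one body part.
    contacts: per-frame 0/1 values. A cycle starts at each 0 -> 1 transition
    (contact onset); within a cycle the phase rises linearly from 0 towards 1.
    Frames outside a complete cycle get None.
    """
    onsets = [i for i in range(1, len(contacts)) if contacts[i] == 1 and contacts[i - 1] == 0]
    phases = [None] * len(contacts)
    for start, end in zip(onsets, onsets[1:]):
        length = end - start
        for i in range(start, end):
            phases[i] = (i - start) / length
    return phases


# Hypothetical contact signals for two body parts over the same 10 frames.
left_foot = [0, 1, 1, 0, 0, 1, 1, 0, 0, 1]
right_hand = [1, 0, 0, 0, 1, 0, 0, 0, 1, 0]

print(local_phase(left_foot))   # the foot's own cycle
print(local_phase(right_hand))  # an independent cycle for the hand
```

Unlike a single global phase for the whole body, each part advances on its own timeline, which is what lets feet, hands, and ball contacts be timed independently.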

So, character motion synthesis is the process of creating realistic and natural-looking movements for animated characters. One of the challenges in this task is learning the spatial-temporal structure of body movements. This means understanding how different parts of the body move together over time.

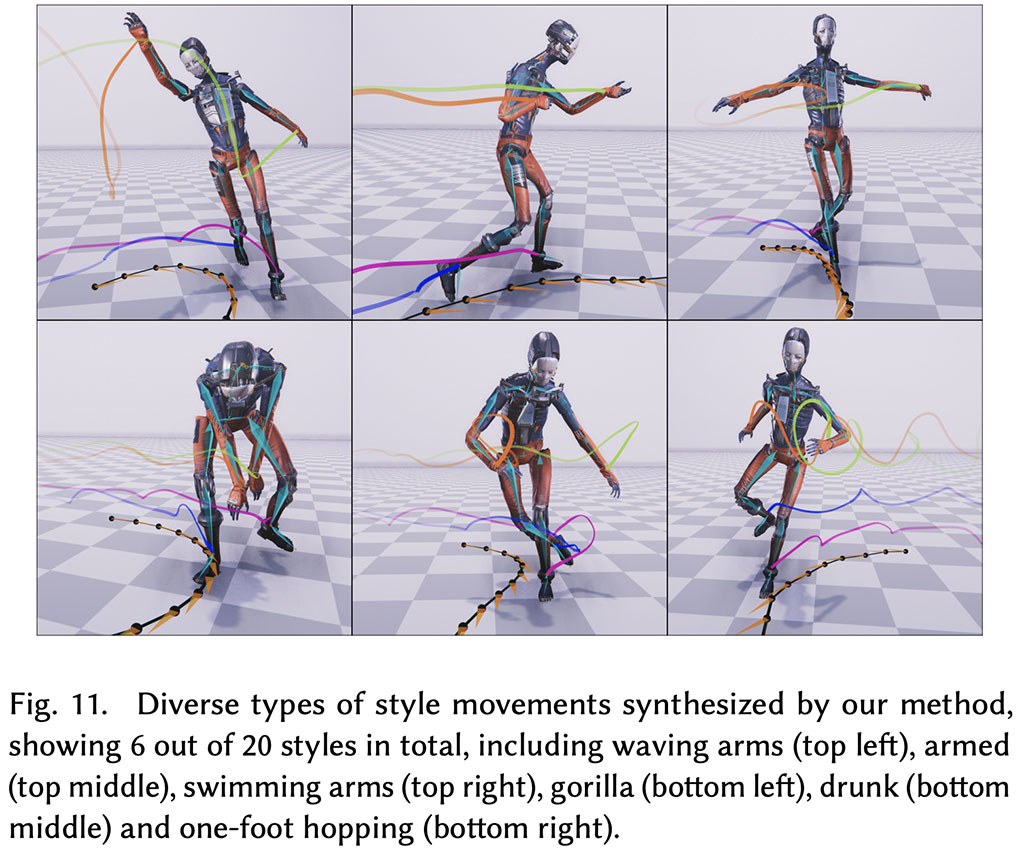

In the paper “DeepPhase: Periodic Autoencoders for Learning Motion Phase Manifolds”, Sebastian Starke, Ian Mason, and Taku Komura propose a new neural network architecture called the Periodic Autoencoder, which learns periodic features from large, unstructured motion datasets in an unsupervised manner. This means the network learns to identify and extract repeating patterns in the motion data without being told explicitly what to look for.

Photo: The Paper “Local Motion Phases for Learning Multi-Contact Character Movements”

The network decomposes character movements into multiple latent channels, each capturing the non-linear periodicity of a different body segment. For example, one channel might capture the leg swing during walking, while another captures the arm swing.

Photo: The Paper “DeepPhase: Periodic Autoencoders for Learning Motion Phase Manifolds”
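Each latent channel can be summarized by a frequency, an amplitude, an offset, and a phase. The paper derives these with its own spectral and learned layers; the sketch below is a simplification that reads all four off the single strongest bin of a discrete Fourier transform. The latent curve is synthetic and hypothetical.

```python
import cmath
import math


def periodic_params(curve, fps):
    """
    Approximate one latent channel as
        amplitude * cos(2*pi*frequency*t + 2*pi*phase) + offset   (t in seconds)
    using the strongest non-DC bin of a discrete Fourier transform.
    Returns (frequency_hz, amplitude, offset, phase_in_cycles).
    """
    n = len(curve)
    offset = sum(curve) / n  # DC term: the curve's mean value
    best_k, best_coef = 1, 0j
    # Positive-frequency bins strictly below Nyquist; DC (k = 0) is skipped.
    for k in range(1, (n + 1) // 2):
        coef = sum(curve[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        if abs(coef) > abs(best_coef):
            best_k, best_coef = k, coef
    frequency = best_k * fps / n
    amplitude = 2 * abs(best_coef) / n
    phase = (cmath.phase(best_coef) / (2 * math.pi)) % 1.0
    return frequency, amplitude, offset, phase


# Hypothetical latent curve: 1 second at 30 fps oscillating 3 times (e.g. a leg channel).
fps = 30
curve = [0.5 + 2.0 * math.cos(2 * math.pi * 3 * t / fps + math.pi / 2) for t in range(fps)]
print(periodic_params(curve, fps))  # approximately (3.0, 2.0, 0.5, 0.25)
```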

The network then extracts a multi-dimensional phase space from the full-body motion data. This phase space is a representation of the different ways the body segments can move together. It can be used to effectively cluster animations and produce a manifold in which computed feature distances provide better similarity measures than the original motion space does. This means that the phase space can be used to find animations that are similar to each other in terms of their spatial-temporal structure.

I suppose it sounds complicated. So, here’s a very simple analogy:

- Imagine you are trying to learn how to dance. One way would be to watch many videos of people dancing and try to copy their movements. However, this would be time-consuming and difficult, and you might still miss many of the movements’ nuances.

- Another way would be to learn the basic steps of dance and then practice combining them in different ways. This is more efficient, but it requires a good understanding of the underlying principles of dance.

The Periodic Autoencoder is similar to the second approach. It learns the basic principles of body movement from large, unstructured motion datasets. This allows it to generate realistic and natural-looking movements for animated characters without having to be explicitly told how to do so. I recommend you check out this great video: EA’s New AI: Next Level Gaming Animation.
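The phase space itself can be illustrated with a short sketch. As we understand the paper, each channel's phase and amplitude become a 2D point, amplitude × (sin, cos) of the phase angle, and the points are concatenated into one vector; distances between such vectors then act as the similarity measure. Here the per-channel amplitudes and phases are simply assumed to be known, and the frame names and values are hypothetical.

```python
import math


def manifold_point(channels):
    """
    Map per-channel (amplitude, phase) pairs, with phase in cycles [0, 1),
    to 2D points amplitude * (sin, cos) and concatenate them into one vector.
    """
    point = []
    for amplitude, phase in channels:
        angle = 2 * math.pi * phase
        point.extend((amplitude * math.sin(angle), amplitude * math.cos(angle)))
    return point


def most_similar(query, library):
    """Name of the library frame closest to the query in phase space."""
    q = manifold_point(query)
    return min(library, key=lambda name: math.dist(q, manifold_point(library[name])))


# Hypothetical frames described by two channels, (leg, arm), as (amplitude, phase).
LIBRARY = {
    "walk_left_step": [(1.0, 0.0), (0.5, 0.5)],
    "walk_right_step": [(1.0, 0.5), (0.5, 0.0)],
    "idle": [(0.05, 0.0), (0.05, 0.0)],
}

# Leg phase 0.98 is numerically far from 0.0 but almost the same point on the cycle.
print(most_similar([(0.9, 0.98), (0.5, 0.48)], LIBRARY))  # walk_left_step
```

Mapping phases onto a circle handles the wrap-around at the end of a cycle, something a raw comparison of phase numbers would get wrong.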

What Else Can AI Do? Simulate Virtual Worlds, Of Course!

AI landscapes, how does that sound? I’d like to note that many people confuse machine learning algorithms with conventional generative algorithms. Yes, the former gets most of the attention, but the latter also interests our team for creating generative open-world locations in our SIDUS HEROES Metaverse.

Let’s be honest: not much effort goes into simulating complex ecosystems in most virtual worlds. Creators rarely think about physics- and biology-based ecosystems; they just throw in some trees and that’s it 😉

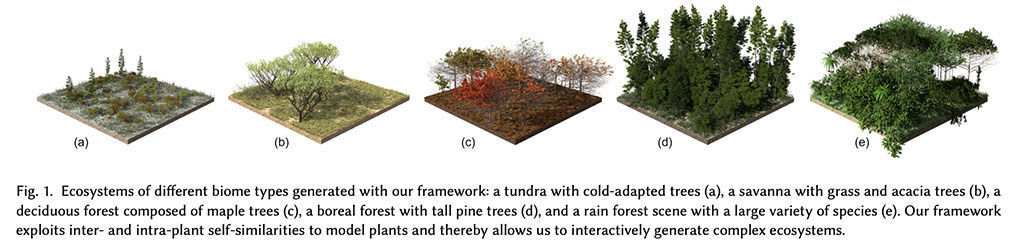

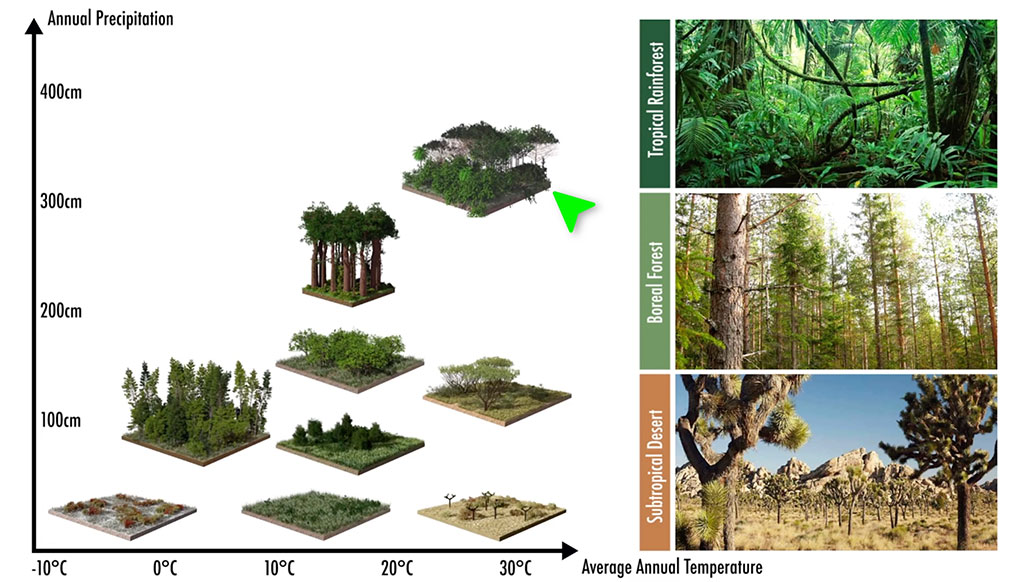

However, if we want virtual worlds to be more realistic, then at the very least the types of vegetation should be determined by environmental factors such as precipitation and temperature.

Photo: The paper “Synthetic Silviculture: Multi-scale Modeling of Plant Ecosystems”

If there is no rain and it’s super cold, we get a tundra; with no rain and high temperatures, we get a desert. Keep the temperature high and add a ton of precipitation, and we get a tropical rainforest (see the image below). This technique promises to simulate all of these climates and everything in between!

Photo: The paper “Synthetic Silviculture: Multi-scale Modeling of Plant Ecosystems”
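The climate rule above can be expressed as a toy lookup that picks a vegetation palette from temperature and precipitation instead of placing trees by hand. The thresholds (mean annual temperature in °C, annual precipitation in mm) and the plant lists are illustrative assumptions, not values from the Synthetic Silviculture paper, which models ecosystems in far more detail.

```python
# Illustrative palettes; a real system would map these to actual plant models.
VEGETATION = {
    "tundra": ["moss", "lichen", "dwarf shrub"],
    "desert": ["cactus", "dry shrub"],
    "grassland": ["grass", "wildflower"],
    "temperate forest": ["oak", "beech", "fern"],
    "savanna": ["grass", "acacia"],
    "tropical rainforest": ["palm", "liana", "broadleaf evergreen"],
}


def classify_biome(mean_temp_c, annual_precip_mm):
    """Toy biome rule; every threshold here is a hypothetical choice."""
    if mean_temp_c < -5:
        return "tundra"
    if annual_precip_mm < 250:
        return "desert"
    if mean_temp_c >= 20:
        return "tropical rainforest" if annual_precip_mm >= 2000 else "savanna"
    return "temperate forest" if annual_precip_mm >= 750 else "grassland"


def plants_for(mean_temp_c, annual_precip_mm):
    """Vegetation palette chosen from the climate rather than by hand."""
    return VEGETATION[classify_biome(mean_temp_c, annual_precip_mm)]


print(classify_biome(-15, 100))   # tundra
print(classify_biome(30, 50))     # desert
print(classify_biome(27, 3000))   # tropical rainforest
```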

Just take a look at the beautiful little worlds below: they are not illustrations but the output of an existing simulation program.

Photo: The paper “Synthetic Silviculture: Multi-scale Modeling of Plant Ecosystems”

The approach in “Synthetic Silviculture: Multi-scale Modeling of Plant Ecosystems” exploits inter- and intra-plant self-similarities to model plant geometry efficiently. The authors focus on the interactive design of plant ecosystems with up to 500k plants while adhering to biological priors known from forestry and botanical research. Their parameter space supports modeling the properties of nine distinct plant ecologies, with each plant represented as a 3D surface mesh, and the framework’s capabilities are illustrated with numerous models of forests and individual plants.

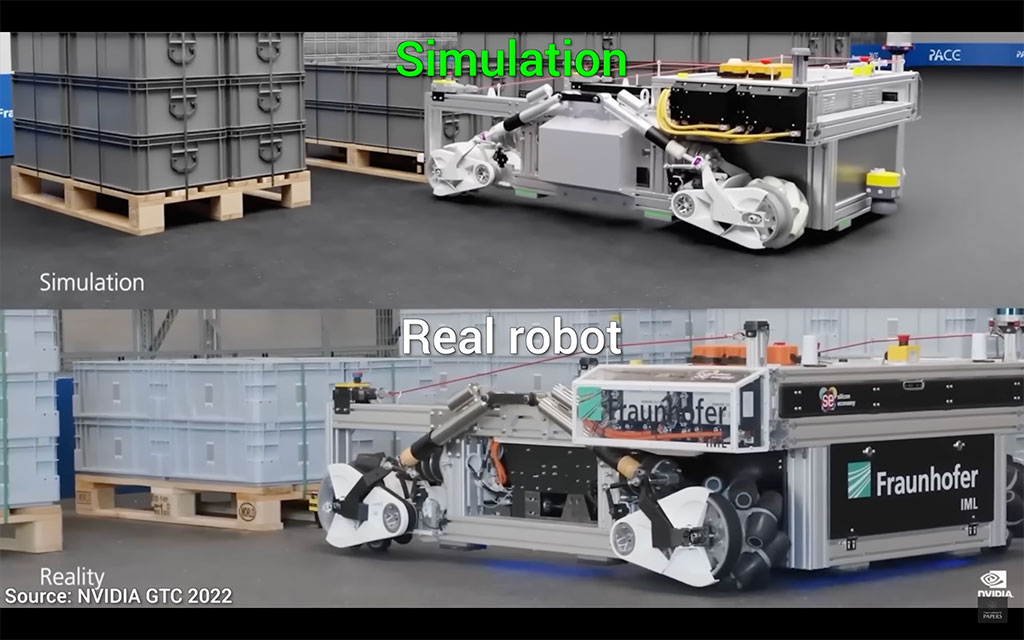
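Why self-similarity helps at the scale of hundreds of thousands of plants can be shown with a generic instancing sketch covering only the inter-plant case: plants whose parameters are nearly identical share one template mesh, and each plant stores just a reference and a position. This is a common geometry-reuse technique, not the paper's method; the parameters, quantization steps, and species are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class PlantParams:
    species: str
    height_m: float
    age_years: int


def template_key(plant, height_step=0.5, age_step=5):
    """Quantize parameters so that similar plants share one key (and one mesh)."""
    return (plant.species, round(plant.height_m / height_step), plant.age_years // age_step)


def build_instances(plants):
    """
    plants: list of (PlantParams, position). Returns (templates, instances), where
    templates maps key -> template id and each instance is (template id, position).
    """
    templates = {}
    instances = []
    for plant, position in plants:
        key = template_key(plant)
        if key not in templates:
            templates[key] = len(templates)  # a real system would build the mesh here, once
        instances.append((templates[key], position))
    return templates, instances


# 10,000 hypothetical plants with random parameters and positions.
rng = random.Random(0)
plants = [
    (
        PlantParams(rng.choice(["pine", "birch", "oak"]), rng.uniform(2, 10), rng.randint(1, 60)),
        (rng.uniform(0, 1000), rng.uniform(0, 1000)),
    )
    for _ in range(10_000)
]
templates, instances = build_instances(plants)
print(len(instances), "plants share", len(templates), "meshes")
```

With these quantization steps there can be at most 3 × 17 × 13 = 663 distinct meshes, no matter how many plants are placed.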

AI Helps to Speed Up Simulations

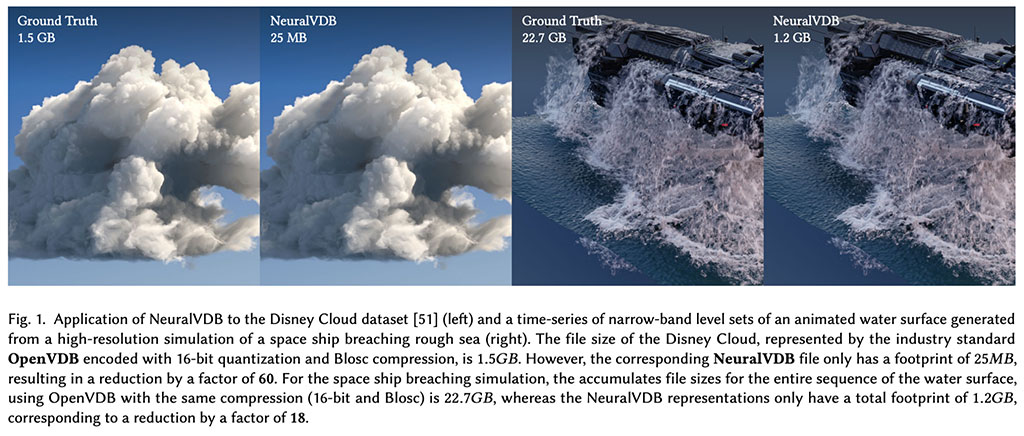

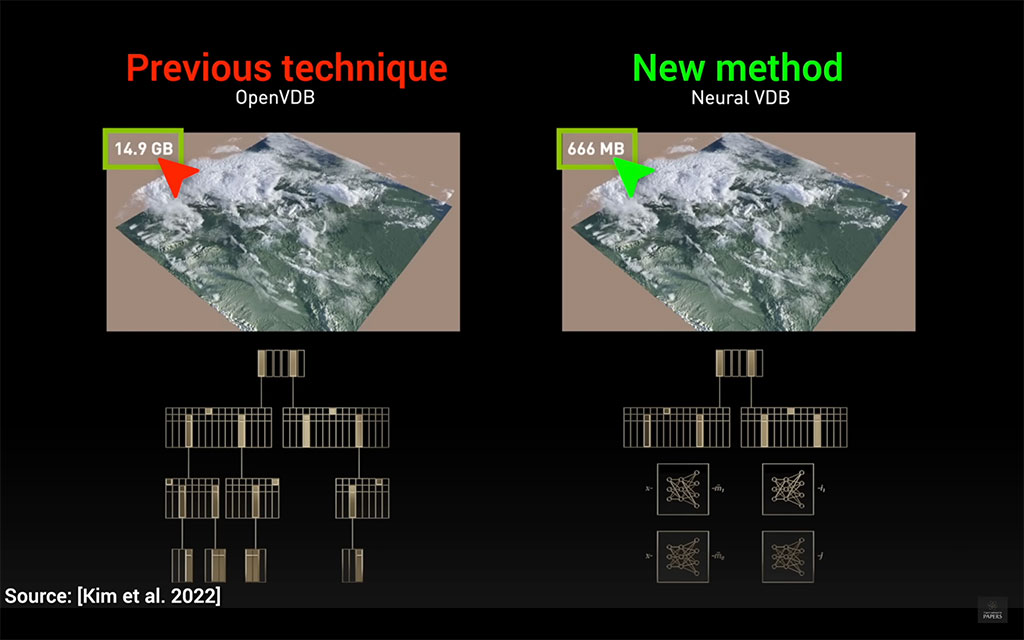

While building the SIDUS HEROES Metaverse, we’re actively implementing AI tools that speed up the simulation of dynamic objects. Some simulations can fill several hard drives with just one minute of physics data, which is an insane amount. So we need something to compress it all, and the scientists at NVIDIA have proposed a crazy idea!

NVIDIA has developed a new AI-based technique called NeuralVDB, which can compress physics simulation data by up to 60 times while maintaining the same visual quality. It offers significant efficiency improvements over OpenVDB, the industry-standard library for simulating and rendering sparse volumetric data such as water, fire, smoke, and clouds, and could have a major impact on a wide range of industries.

Photo: The Paper “NeuralVDB: High-resolution Sparse Volume Representation using Hierarchical Neural Networks”

OpenVDB is not just for hobby projects: it is used in many of the blockbuster feature films you know and love, movies that have won many Academy Awards. And we are not done yet.

Here are some more potential applications of NeuralVDB:

- Creating realistic and immersive virtual worlds.

- Simulating complex physical phenomena, such as weather and climate change.

- Supporting industrial visualization when designing new products and manufacturing processes.

- Improving scientific research and the accuracy of medical diagnostics and treatments.

NeuralVDB is still under development, but it has the potential to revolutionize the way we simulate and visualize the world around us. I also highly recommend watching this short video about the solution discussed above.
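The "sparse" in sparse volumetric data can be illustrated with a minimal sketch: dense 8×8×8 blocks are allocated only where something non-empty is written, loosely mirroring the leaf nodes of OpenVDB's default tree. This is neither OpenVDB's API nor NeuralVDB's method (which, per the paper's title, builds on hierarchical neural networks); it only shows why empty space costs almost nothing. The smoke ball and the 256³ domain are hypothetical.

```python
BLOCK = 8  # voxels per block edge, like the 8x8x8 leaf nodes of OpenVDB's default tree


class SparseGrid:
    """Allocates dense 8x8x8 blocks only where values are written."""

    def __init__(self, background=0.0):
        self.background = background
        self.blocks = {}  # (bx, by, bz) -> flat list of BLOCK**3 voxel values

    @staticmethod
    def _offset(x, y, z):
        # Position of a voxel inside its block, flattened to a list index.
        return ((x % BLOCK) * BLOCK + (y % BLOCK)) * BLOCK + (z % BLOCK)

    def set(self, x, y, z, value):
        key = (x // BLOCK, y // BLOCK, z // BLOCK)
        leaf = self.blocks.get(key)
        if leaf is None:
            leaf = [self.background] * BLOCK ** 3  # allocate the block on first write
            self.blocks[key] = leaf
        leaf[self._offset(x, y, z)] = value

    def get(self, x, y, z):
        leaf = self.blocks.get((x // BLOCK, y // BLOCK, z // BLOCK))
        if leaf is None:
            return self.background  # empty space is never stored
        return leaf[self._offset(x, y, z)]

    def stored_voxels(self):
        return len(self.blocks) * BLOCK ** 3


# A hypothetical puff of smoke: a ball of radius 10 inside a 256^3 domain.
grid = SparseGrid()
cx = cy = cz = 128
for x in range(cx - 10, cx + 11):
    for y in range(cy - 10, cy + 11):
        for z in range(cz - 10, cz + 11):
            if (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2 <= 100:
                grid.set(x, y, z, 1.0)

dense = 256 ** 3
print(grid.stored_voxels(), "stored voxels vs", dense, "in a dense grid")
```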

AI Lets You Have Real Conversations with NPCs in Video Games

Photo: Replica Studios

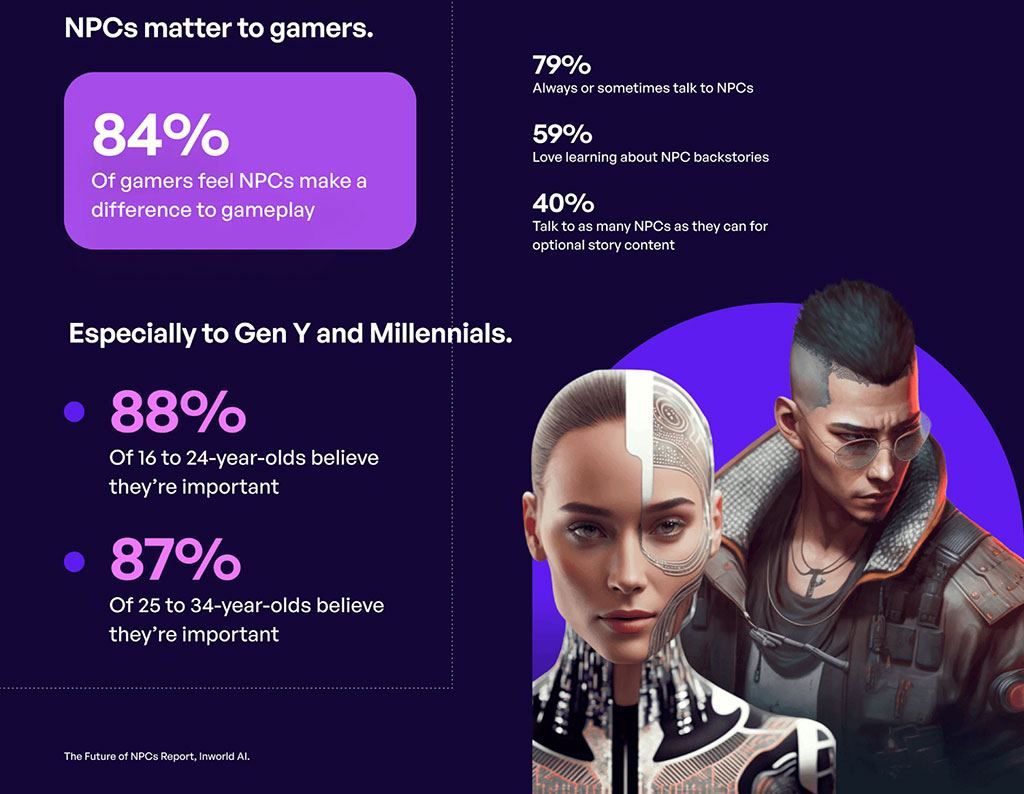

Non-player characters (NPCs) are a major component of almost all mobile, PC, and console games. They open up the game world, add to it, and enhance the gameplay in a variety of ways. However, NPCs have problems that developers still struggle with. For example, secondary characters are often poor conversationalists: they either make simple noises or repeat the same lines over and over. This is because NPCs are typically programmed with a limited set of pre-recorded dialogue.

Photo: The Future of NPCs Report

According to The Future of NPCs Report conducted by Inworld AI, the majority of players believe that improving NPC behavior will definitely have a positive impact on gameplay enjoyment.

AI is changing this! Developers are using AI to create NPCs that generate original, spoken dialogue by combining GPT with text-to-speech technology: the language model writes the lines, and text-to-speech voices them. Some of these NPCs can even lead players into new storylines.

You can catch a glimpse of this in a recently released demo from Replica Studios featuring a modified version of The Matrix Awakens. It lets you use your own microphone to converse with NPCs on city streets. The demo offers a clunky yet fascinating glimpse of how voice AI could soon make video games far more immersive, allowing game studios to scale up the social ‘interactability’ of virtual worlds in a way that would be practically impossible with human voice actors.
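The language-model-plus-text-to-speech loop can be sketched as follows. The NPC keeps a persona and a short conversation history, builds a prompt from them, asks a language model for the next line, and passes that line to a speech engine. `fake_generate` and `fake_speak` are hypothetical stand-ins, not any vendor's API; in a real game they would call a GPT-style model and a text-to-speech service, and a speech-to-text step would turn the player's microphone input into text first.

```python
from dataclasses import dataclass, field


@dataclass
class NPC:
    name: str
    persona: str
    history: list = field(default_factory=list)  # (speaker, text) pairs

    def build_prompt(self, player_line):
        lines = [f"You are {self.name}. {self.persona}"]
        for speaker, text in self.history[-6:]:  # keep only recent turns to bound prompt size
            lines.append(f"{speaker}: {text}")
        lines.append(f"Player: {player_line}")
        lines.append(f"{self.name}:")
        return "\n".join(lines)

    def respond(self, player_line, generate, speak):
        """generate: prompt -> text; speak: text -> audio. Both are injected."""
        reply = generate(self.build_prompt(player_line)).strip()
        self.history.append(("Player", player_line))
        self.history.append((self.name, reply))
        return reply, speak(reply)


def fake_generate(prompt):
    """Hypothetical stand-in for a call to a GPT-style language model."""
    return "Nice weather for a walk, isn't it?"


def fake_speak(text):
    """Hypothetical stand-in for a text-to-speech engine; returns fake audio bytes."""
    return text.encode("utf-8")


npc = NPC("Pedestrian", "A friendly local walking down a city street.")
reply, audio = npc.respond("Hi there!", fake_generate, fake_speak)
print(reply)
```

Injecting `generate` and `speak` as plain functions keeps the dialogue logic independent of any particular model or voice provider.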

Overall, AI is having a major impact on the gaming industry and its influence is only going to grow in the years to come. AI is being used to create more realistic and immersive game worlds, develop new gameplay mechanics and personalize the gaming experience for each player.

This is just the beginning of what AI can do for the gaming industry. As the technology continues to develop, we can expect to see even more innovative and groundbreaking AI applications in games.

Disclaimer: Coinspeaker is committed to providing unbiased and transparent reporting. This article aims to deliver accurate and timely information but should not be taken as financial or investment advice. Since market conditions can change rapidly, we encourage you to verify information on your own and consult with a professional before making any decisions based on this content.

CEO of SIDUSHEROES.COM and NFTSTARS.APP. International entrepreneur with a global vision for innovation. Software developer. Property developer in Australia. Angel investor.