Mercy Mutanya is a Tech enthusiast, Digital Marketer, Writer and IT Business Management Student. She enjoys reading, writing, doing crosswords and binge-watching her favourite TV series.

Edited by Julia Sakovich

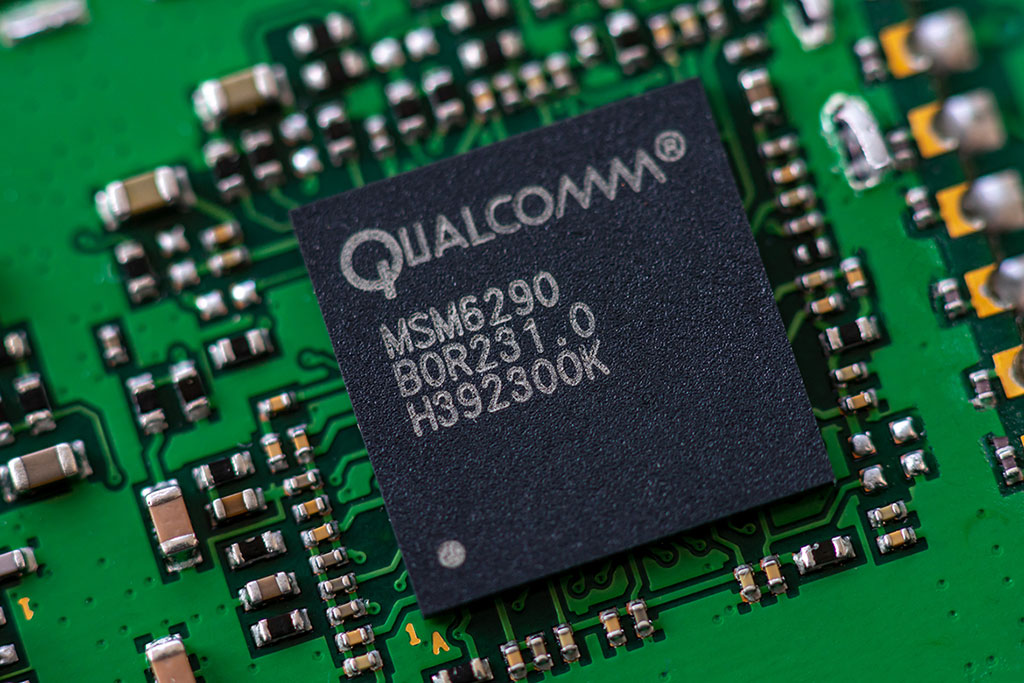

Qualcomm Inc (NASDAQ: QCOM) has unveiled two new chips designed to run AI software, including large language models (LLMs), without an internet connection. Generative AI applications such as OpenAI’s ChatGPT require a lot of processing power and have so far largely run in the cloud on powerful Nvidia Corp (NASDAQ: NVDA) graphics processors.

The new hardware includes Qualcomm’s Snapdragon X Elite chip for PCs and laptops and the Snapdragon 8 Gen 3 for high-end Android phones. Faster AI processing in high-end Android phones from manufacturers such as Asus and Sony could open a new front of competition with Apple’s iPhones, which continue to add new AI features.

Qualcomm’s latest Snapdragon chip is considerably faster than last year’s Gen 2 chip. Qualcomm’s Senior Vice President for Mobile, Alex Katouzian, noted that last year’s processor took 15 seconds to generate an image from a text prompt, while the new chip completes the same task in under a second.

“If someone would go to buy a phone today, they would say, how fast is the CPU, how much memory do I get on this thing? What does the camera look like?” Katouzian said. “Over the next two or three years, people are going to say, what kind of AI capability am I going to have on this?”

Since 2018, Qualcomm’s smartphone chips have included dedicated AI components called neural processing units (NPUs), which have been used to improve photos and other features. The company revealed during Tuesday’s announcement that its new smartphone chip can handle generative AI language models with up to 10 billion parameters. Some of the largest models have many times more: OpenAI’s GPT-3, for example, has about 175 billion parameters, more than 17 times that limit.

The chipmaker’s executives stated that it is possible to run such AI models on devices if the chips have enough processing speed and memory. They added that running AI models on the device itself rather than in the cloud would be faster and more private.

The company, which is also developing its own AI models, believes that faster on-device processing could open up new use cases and applications such as personal voice assistants. A device’s voice assistant could use a local AI model for simple queries and send more complex ones to a more powerful computer in the cloud.

Starting early next year, the Snapdragon 8 Gen 3 will appear in high-end Android devices costing more than $500 from brands such as Asus, Sony, and OnePlus.

Qualcomm’s new X Elite chip for computers is based on Arm and competes with Intel’s x86 chips. Laptops built on the chip, which uses what Qualcomm calls Oryon cores, are expected to go on sale in the middle of next year. Qualcomm claims its chip outperforms Apple’s M2 Max while using less power.

Disclaimer: Coinspeaker is committed to providing unbiased and transparent reporting. This article aims to deliver accurate and timely information but should not be taken as financial or investment advice. Since market conditions can change rapidly, we encourage you to verify information on your own and consult with a professional before making any decisions based on this content.